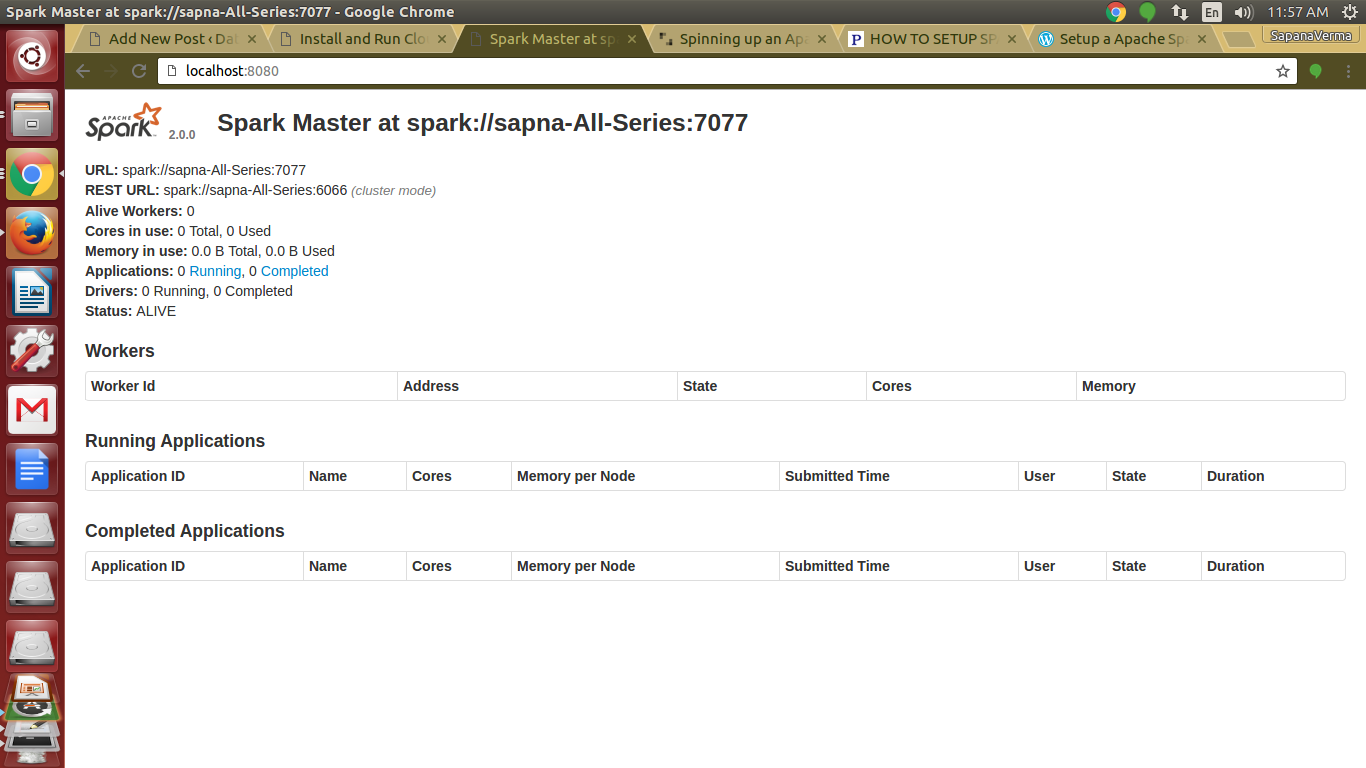

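5. Configure the worker nodes. In the Spark conf directory, create the slaves file from its template:

    cp slaves.template slaves

Because a start-all.sh script also exists outside Spark (Hadoop ships one in its sbin directory), rename Spark's start and stop scripts to avoid conflicts. The commands below use the renamed start-spark-all.sh.

8. Configure the Spark system variables:

    vim /etc/profile

    export PYTHONPATH=$SPARK_HOME/python:$SPARK_HOME/python/lib/py4j-0.10.9-src.zip:$PYTHONPATH
    export PATH=$PATH:$SPARK_HOME/sbin:$SPARK_HOME/bin

Both lines rely on SPARK_HOME pointing at the install directory, /moudle/spark-3.0.1. Python reads PYTHONPATH (no underscore), and the py4j file name must match the archive under $SPARK_HOME/python/lib; Spark 3.0.1 ships py4j-0.10.9-src.zip.

Send Spark from the bigdata1 node to the bigdata2 and bigdata3 nodes (run from /moudle, and repeat for bigdata3):

    scp -r spark-3.0.1 bigdata2:/moudle

9. Send /etc/profile from the bigdata1 node to the bigdata2 and bigdata3 nodes (repeat for bigdata3):

    scp /etc/profile bigdata2:/etc/profile

Then activate the system environment variables on the bigdata2 and bigdata3 nodes.

Start Spark with a single command:

    start-spark-all.sh

Start the history server on the bigdata1 node:

    start-history-server.sh

The history server's startup output shows where it writes its log, which in turn gives the browser address for viewing Spark applications:

    Starting .history.HistoryServer, logging to /moudle/spark-3.0.1/logs/.

To see the master node's startup output, cat its log file under /moudle/spark-3.0.1/logs/. The Spark cluster page defaults to port 8080, but the startup output shows that the master actually started on port 8081 (Spark typically moves to the next port when the configured one is already in use). The browser address for the Spark cluster page is therefore the master node's IP address plus the port number, for example 192.168.239.131:8081.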
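The two scp steps above have to be repeated for every worker, which is easy to get wrong by hand. A minimal sketch of a loop that does both copies per node follows. The `WORKERS` list, the `DRY_RUN` switch, and the `run` and `distribute` helpers are names made up for this illustration; the paths and host names are the ones used in this guide. By default it only prints the commands.

```shell
#!/bin/sh
# Hypothetical helper script; WORKERS, DRY_RUN, run and distribute are
# illustrative names, not part of Spark.
WORKERS="bigdata2 bigdata3"
DRY_RUN=${DRY_RUN:-1}   # 1 = only print the commands, 0 = really run them

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Copy the Spark directory and /etc/profile to every worker node.
distribute() {
    for host in $WORKERS; do
        run scp -r /moudle/spark-3.0.1 "$host:/moudle"
        run scp /etc/profile "$host:/etc/profile"
    done
}

distribute
```

Setting `DRY_RUN=0` before running the script performs the copies for real; that assumes passwordless SSH from bigdata1 to the workers is already set up.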

Event logging uses two properties:

1) spark.eventLog.enabled: default value false. Whether to log Spark events, so that an application's web UI can be rebuilt after the application completes.

2) spark.eventLog.dir: default value file:///tmp/spark-events. The path where the log information is saved. It can be an HDFS path starting with hdfs:// or a local path starting with file://, and it must be created in advance. Here it is hdfs://bigdata1:8020/sparklog.

Where each group of settings is saved:

1) Configuration starting with spark.history is saved in SPARK_HISTORY_OPTS in spark-env.sh.

2) Configuration starting with spark.eventLog is saved in the spark-defaults.conf configuration file.

What is the difference between the two directories?

spark.eventLog.dir: all the information of an application is recorded in the path specified by this property while the application runs.

spark.history.fs.logDirectory: the Spark History Server page only shows information under this path.

For example, if spark.eventLog.dir was initially hdfs://hadoop000:8020/directory and was later changed to hdfs://hadoop000:8020/directory2, while spark.history.fs.logDirectory still specifies hdfs://hadoop000:8020/directory, then only the log information of applications that ran while logs went to that first directory can be displayed.
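Since the History Server only shows what lies under its own log directory, a quick check that both settings point at the same path can catch the mismatch described above. The following is a minimal sketch: `conf_value` and `same_log_dir` are hypothetical helpers, and the demo file stands in for a real spark-defaults.conf (its `spark.eventLog.enabled true` line is an assumption about what that file would contain).

```shell
#!/bin/sh
# Hypothetical helpers for illustration only.

# Print the value of a key from a whitespace-separated "key value" file.
conf_value() {
    awk -v key="$1" '$1 == key { print $2; exit }' "$2"
}

# Succeed only if spark.eventLog.dir in file $1 equals directory $2.
same_log_dir() {
    event_dir=$(conf_value spark.eventLog.dir "$1")
    [ -n "$event_dir" ] && [ "$event_dir" = "$2" ]
}

# Demo with a throwaway file that mimics spark-defaults.conf (assumed content).
demo_conf=$(mktemp)
cat > "$demo_conf" <<'EOF'
spark.eventLog.enabled  true
spark.eventLog.dir      hdfs://bigdata1:8020/sparklog
EOF

if same_log_dir "$demo_conf" "hdfs://bigdata1:8020/sparklog"; then
    echo "event log dir and history log dir match"
else
    echo "mismatch: the History Server would not see new applications"
fi
rm -f "$demo_conf"
```

On a real installation, the first argument would be the path to spark-defaults.conf in the Spark conf directory, and the second the value given to spark.history.fs.logDirectory.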

Add the following at the end of /moudle/spark-3.0.1/conf/spark-env.sh:

    export HADOOP_CONF_DIR=/moudle/hadoop-3.3.0/etc/hadoop
    export SPARK_HISTORY_OPTS="-Dspark.history.ui.port=4000 … -Dspark.history.fs.logDirectory=hdfs://bigdata1:8020/sparklog"

1) spark.history.ui.port: default value 18080, the web port of the HistoryServer. Here it is changed to 4000.

2) spark.history.retainedApplications: default value 50, the number of application histories kept in memory. If this value is exceeded, the oldest application information is removed from memory, and its page has to be rebuilt when the removed application is accessed again.

3) spark.history.fs.logDirectory: the Spark History Server page only shows information under this path.
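The History Server options are passed as `-D` Java system properties inside one quoted string, so it can help to assemble that string piece by piece. The sketch below does so with the port and log directory used in this guide. The retained-applications value is an assumption (Spark's default of 50), and the three upper-case helper variables are names introduced only for this example.

```shell
#!/bin/sh
# Illustrative variables; only SPARK_HISTORY_OPTS is read by Spark.
HISTORY_UI_PORT=4000
RETAINED_APPLICATIONS=50   # assumption: Spark's default value
HISTORY_LOG_DIR=hdfs://bigdata1:8020/sparklog

# Build the option string one property at a time.
SPARK_HISTORY_OPTS="-Dspark.history.ui.port=${HISTORY_UI_PORT}"
SPARK_HISTORY_OPTS="${SPARK_HISTORY_OPTS} -Dspark.history.retainedApplications=${RETAINED_APPLICATIONS}"
SPARK_HISTORY_OPTS="${SPARK_HISTORY_OPTS} -Dspark.history.fs.logDirectory=${HISTORY_LOG_DIR}"
export SPARK_HISTORY_OPTS

printf '%s\n' "$SPARK_HISTORY_OPTS"
```

Keeping each property on its own line makes it harder to lose a space or a quote when one value changes.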

Unzip the installation package:

    tar -zxvf spark-3.0.1.tgz -C /moudle

Edit the Spark environment file:

    vim /moudle/spark-3.0.1/conf/spark-env.sh
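A fresh Spark distribution ships only spark-env.sh.template in its conf directory, so spark-env.sh may not exist yet when it is first edited. The sketch below creates it from the template when needed. `ensure_spark_env` is a hypothetical helper name, and the default path is the install location used in this guide.

```shell
#!/bin/sh
# Hypothetical helper: make sure spark-env.sh exists in the given conf
# directory, copying it from the shipped template if necessary.
ensure_spark_env() {
    conf_dir=${1:-/moudle/spark-3.0.1/conf}
    if [ ! -f "$conf_dir/spark-env.sh" ] && [ -f "$conf_dir/spark-env.sh.template" ]; then
        cp "$conf_dir/spark-env.sh.template" "$conf_dir/spark-env.sh"
    fi
    # Succeed only if the file is now present.
    [ -f "$conf_dir/spark-env.sh" ]
}

ensure_spark_env /moudle/spark-3.0.1/conf \
    || echo "spark-env.sh not found; has Spark been unpacked to /moudle?"
```

An existing spark-env.sh is never overwritten, so the helper is safe to run more than once.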

Install Apache Spark standalone

Spark-3.0.1-bin-hadoop3.2 Download Address:
